Job Offer Tagging¶

Our mission¶

The mission is to automate job applications for everybody.

Pre-done work¶

I wrote a web crawler in Python to collect job descriptions from the German job portal https://www.stepstone.de. It involved the following steps:

- Crawling all the links pointing to pages that show job offers
- Scraping each job offer with all information available
  - Job name
  - Company and link to its description page
  - Job type (part-time/full-time/freelance etc.)
  - Description (Stepstone uses HTML blocks to divide each section of the job offer)
- Saving all of the divided descriptions in a DataFrame (pandas)
  - 1st column: headline
  - 2nd column: text
- Cleaning the data
  - Reducing the labels from about 5000 to 5 so that we have the following labels:
    - company: a description of the company ("About us"-page)
    - tasks: a description of the job offered (especially the tasks of the job)
    - profile: a description of the skills needed to fulfill the job's requirements ("Your profile")
    - offer: a description of what the company offers the applicant ("What to expect", "What we offer to you")
    - contact: contact details for the applicant (also including a hint on whom to address in the application)
- Classifying job offers
  - This step was taken with the goal of separating the different parts of a job offer
  - As seen above, we usually have 5 parts, which we want to classify
  - I will not describe the whole process in detail here, but if you wish to follow it up to this point, you can open the notebook 'job_description_classification.ipynb'
  - I stored the trained model so that it can be reused

What this notebook covers¶

In this notebook, the goal is to use the trained classification model to predict the parts of each job offer, so that we know exactly where to extract which kind of information from.

The data we use¶

We use a DataFrame of job offers that I scraped from www.stepstone.de. The scraping process is documented in a separate Jupyter notebook.

Pipeline¶

Making imports¶

First, we import the libraries and tools (including the trained model) we need to get started.

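# Make the necessary imports
import json
import pickle
from collections import OrderedDict
from io import StringIO

import pandas as pd
from lxml import html
from lxml import etree

# Load the scraped job offers. json.load() returns a JSON string here,
# which pandas then parses into a DataFrame.
with open('../Job_Offers/job_offers.json', 'r') as infile:
    job_offers_json = json.load(infile)

# Recent pandas versions expect a file-like object rather than a raw string
job_offers = pd.read_json(StringIO(job_offers_json)).sort_index()

# Load the trained classification model
with open("../../anzeigen_model_96.sav", 'rb') as model_file:
    model = pickle.load(model_file)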
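The two-step load (first `json.load`, then `pd.read_json`) suggests that the file stores a JSON string which itself contains the serialised DataFrame. The same pattern appears at the end of this notebook, where the output of `to_json()` is passed to `json.dump()`. A minimal sketch of that round trip using only the standard library; the sample record is made up for illustration:

```python
import io
import json

# Hypothetical stand-in for the string returned by DataFrame.to_json()
inner = json.dumps({"title": {"0": "Softwareentwickler (m/w/d)"}})

# json.dump() on a string writes it as one quoted, escaped JSON string
buffer = io.StringIO()
json.dump(inner, buffer)

# The first load therefore yields a str, not a dict
buffer.seek(0)
loaded = json.load(buffer)

# A second decoding step recovers the actual data
data = json.loads(loaded)
```

In the notebook, `pd.read_json` plays the role of the second decoding step.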

1. Defining useful functions¶

Cleaning function¶

Because our trained model does most of the preprocessing work, we only remove or replace a few strings that we don't want to pass to the prediction model.

This cleaning step is called just before making the prediction.

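def cleanText(docs):
    """Normalise line breaks and a few special characters in each text."""
    new_docs = []
    for d in docs:
        d = d.replace("\r","")
        d = d.replace("\n", " ")
        # replace special Unicode characters
        d = d.replace(u"\xad",u"")    # soft hyphen
        d = d.replace(u'\xa0', u' ')  # non-breaking space
        # \xa4, \xb6, \xbc and \x9f are the second bytes of the UTF-8
        # encodings of ä, ö, ü and ß, presumably left over from mis-decoded text
        d = d.replace(u'\xa4', u'ä')
        d = d.replace(u'\xb6', u'ö')
        d = d.replace(u'\xbc', u'ü')
        d = d.replace(u'\xc4', u'Ä')
        d = d.replace(u'\xd6', u'Ö')
        d = d.replace(u'\xdc', u'Ü')
        d = d.replace(u'\x9f', u'ß')
        new_docs.append(d)
    return new_docs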

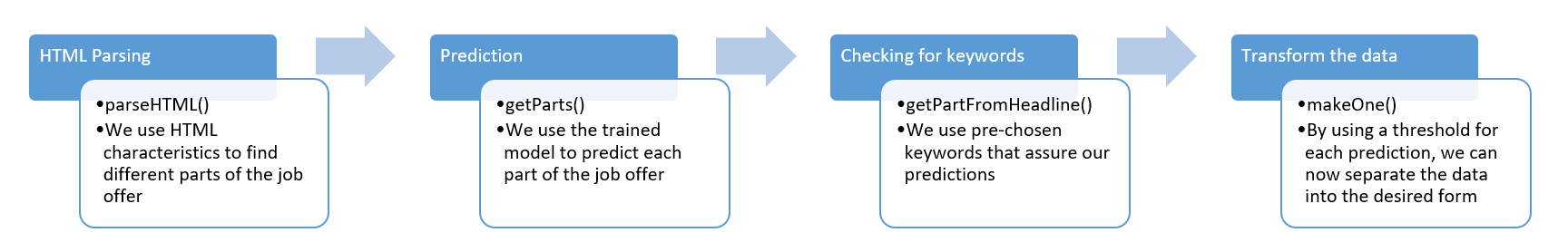

HTML Parsing¶

This function is essential for transforming the input into useful parts of the job offer. We only use it when the number of headlines is too small, which tells us that the website did not mark the individual parts. In that case we have to find out on our own where the job offer is divided.

This is what an example of an unstructured job offer can look like:

<div class="card__body">\n<h2 class="card__title color-custom">SII TECHNOLOGIES</h2>\n<div class="richtext ">\n<p><strong>Wir denken</strong> in Technologie. Vom kleinsten Element bis zum virtuellen Raum.<br> <strong>Wir sind</strong> ein weltweit tätiger Technologiedienstleister in den Bereichen:<br> Digital Services | Engineering | Systems & Modules | Precision Parts | HR Services<br> <strong>Wir arbeiten</strong> erfolgreich in einem starken Team - facettenreich, engagiert und kreativ.</p> \n<p>Sie haben ein neues berufliches Ziel? Schlagen Sie mit SII Technologies Ihren Weg ein! Verwirklichen Sie Technik gemeinsam mit uns als <strong>Softwareentwickler (m/w/d) Mobile Applikationen Android</strong> am Standort Augsburg, Dresden, Ingolstadt, Mannheim, München, Regensburg oder Rosenheim.</p> \n<p>Stellen-ID: 13052-ST</p>\n</div>\n</div>\n\n',

'<div class="card__body">\n<h2 class="card__title color-custom">Ihre Aufgaben</h2>\n<div class="richtext ">\n<ul> \n<li>Entwickeln von Applikationssoftware für mobile Endgeräte in Android (Programmierschwerpunkt für Android: Java, Android Studio)</li> \n<li>Erarbeiten der Designspezifikation sowie Dokumentation, Testing und Inbetriebnahme</li> \n<li>Entwicklungsbegleitende Qualitätssicherung</li> \n<li>Weiterentwicklung mobiler Themen</li> \n</ul>\n</div>\n</div>\n\n',

'<div class="card__body">\n<h2 class="card__title color-custom">Ihr Profil</h2>\n<div class="richtext ">\n<ul> \n<li>Abgeschlossenes Studium der Informatik, technische Informatik, Elektrotechnik oder vergleichbar</li> \n<li>Fundierte Kenntnisse in der Entwicklung von nativen Apps im Android-Umfeld</li> \n<li>Gute Kenntnisse im Umgang mit einschlägigen Entwicklungsumgebungen</li> \n<li>Gute Deutsch- und Englischkenntnisse</li> \n</ul>\n</div>\n</div>\n\n',

'<div class="card__body">\n<h2 class="card__title color-custom">Ihre Vorteile</h2>\n<div class="richtext ">\n<ul> \n<li>Stärkenorientierte Mitarbeiterförderung sowie firmeninternes Nachwuchskräfte-Entwicklungsprogramm </li> \n<li>Modern ausgestattete und klimatisierte Arbeitsplätze mit innovativer Technik </li> \n<li>30 Tage Jahresurlaub, flexible Arbeitszeiten sowie leistungsgerechte Vergütung</li> \n<li>Vielseitige Angebote und Leistungen (z. B. VWL, bAV, Kinderbetreuungszuschuss, JobRad, Mitarbeiterrabatte, Events) </li> \n<li>Motiviertes Team in einer offenen und wertschätzenden Unternehmenskultur mit kurzen Entscheidungswegen</li> \n</ul>\n</div>\n</div>\n\n',

'<div class="card__body">\n<h2 class="card__title color-custom">Kontakt</h2>\n<div class="richtext">\n<p>Ansprechpartner bei Fragen:<br> <strong><strong>Frau Claudia Boskovic</strong></strong><br> <u><a href="tel:082129990160" target="_blank">+49 821 29990-160</a></u><br> <u><a href="mailto:jobs@de.sii.group" target="_blank">jobs@de.sii.group</a></u></p> \n<p><strong>SII Technologies GmbH</strong> | <a href="mailto:jobs@de.sii.group" target="_blank">jobs@de.sii.group</a> | <a href="http://www.de.sii.group/" target="_blank">www.de.sii.group</a></p>\n</div>\n</div>

Pretty messy, isn't it?

Here's how we do it¶

def parseHTML(document):
    jd = document # jd = job document
    all_texts = [[]]
    all_headlines = []
    if len(jd["headlines"])<=2:
        for h in jd["htmls"]:
            tree = html.fromstring(h)
            liste = tree.xpath("//*")
            liste = [l for l in liste if l != "\xa0"]
            for l in liste:
                if l.tag == "strong":
                    all_texts.append([])
                if l.tag == "p":
                    if l.text_content() != "\xa0":
                        all_texts.append([l.text_content()])
                elif l.tag == "li":
                    all_texts[-1].append(l.text_content())
                elif l.tag == "h1" or l.tag=="h2" or l.tag=="h3":
                    all_texts.append([])
        all_texts = [a for a in all_texts if a != [] and a != [''] and a != [' ']]
        return all_texts, []
    else:
        return [jd["text"]], [jd["headlines"]]

As you can see, we basically look at specific HTML tags to divide the content into parts. For documents where the number of headlines tells us that the text has already been partitioned (more than two headlines, i.e. len(jd["headlines"])<=2 is false), we skip the parsing and return the existing texts and headlines. This is the case for most documents.
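To make the splitting idea concrete, here is a minimal sketch that uses the standard library's `html.parser` instead of lxml, so it runs without extra dependencies. It is a simplification, not the function above: the class `PartSplitter`, the helper `split_parts` and the sample fragment are hypothetical, and `<strong>` tags are ignored.

```python
from html.parser import HTMLParser


class PartSplitter(HTMLParser):
    """Hypothetical helper: collects <p> and <li> texts and starts
    a new part at every heading (h1-h3) and every <p> tag."""

    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self.parts = [[]]
        self._capture = None  # tag whose text is currently being collected
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS or tag == "p":
            self.parts.append([])
        if tag in ("p", "li"):
            self._capture = tag
            self._buffer = []

    def handle_data(self, data):
        if self._capture:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._capture:
            text = "".join(self._buffer).strip()
            if text:
                self.parts[-1].append(text)
            self._capture = None


def split_parts(fragment):
    """Return the non-empty parts found in an HTML fragment."""
    parser = PartSplitter()
    parser.feed(fragment)
    parser.close()
    return [part for part in parser.parts if part]


sample = (
    "<h2>Ihre Aufgaben</h2><ul><li>Testing</li><li>Dokumentation</li></ul>"
    "<p>Stellen-ID: 13052-ST</p>"
)
parts = split_parts(sample)
```

Here the two list items end up in one part because they follow the same heading, while the paragraph opens a part of its own.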

Predicting function¶

Now that we have divided the job offer into the desired parts, we want to know what these parts are about. Remember that we have 5 different labels:

- company: a description of the company ("About us"-page)

- tasks: a description of the job offered (especially the tasks of the job)

- profile: a description of the skills needed to fulfill the job's requirements ("Your profile")

- offer: a description of what the company offers for the applicant ("What to expect", "What we offer to you")

- contact: contact details for the applicant (also including a hint on whom to address in the application)

To perform these predictions we have to make the following steps:

- Count the lengths of documents (document = array of partial texts)
  - This allows us to transform the data properly (we'll get to this in detail in a following section)
- Get a 'hard' prediction from keyword analysis (we'll come to this later on as well)
- Compare the model predictions with the 'hard' predictions
  - We prefer the hard predictions because they give a clear answer
- Remove 'dirty' data
  - In this case we have to look for JavaScript elements we don't want to add to our clean data (fortunately these elements all have the same form)
- Calculate, for each text, its share of characters relative to the whole job offer
  - You'll see what that's useful for
- Bring the documents into the right order
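The character ratios mentioned in the steps above can be sketched in isolation. The following is a simplified illustration with made-up texts; the variable names mirror those used in the function below, but the nesting of the sample data is an assumption.

```python
# Hypothetical job offer: two parts, each a list of text snippets
documents = [
    ["Wir sind ein Technologiedienstleister."],
    ["Java", "Android Studio", "Testing und Inbetriebnahme"],
]

# Total number of characters in the whole job offer
length_document = sum(len(text) for part in documents for text in part)

ratios = []
for part in documents:
    length_part = sum(len(text) for text in part)
    for text in part:
        ratio_document = len(text) / length_document  # share of the whole offer
        ratio_part = len(text) / length_part          # share of its own part
        # Scaled by the number of snippets: 1.0 means "average length in its part"
        ratio_part_normalized = ratio_part * len(part)
        ratios.append((ratio_document, ratio_part, ratio_part_normalized))
```

The shares of all snippets add up to 1 across the document, and the shares within each part add up to 1 as well.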

def getParts(documents, headlines):
    length_document = sum([len(a) for jd in documents for a in jd])
    result = {"company":[],"tasks":[],"profile":[],"offer":[],"contact":[]}
    for i in range(0,len(documents)):
        all_d = documents[i]
        # 'Hard' predictions are only possible with one headline per text
        from_headline = []
        if len(documents) == len(headlines) and len(all_d) == len(headlines[i]):
            all_h = headlines[i]
            from_headline = getPartFromHeadline(all_h)
        predictions_part = model.predict(cleanText(all_d))
        if len(from_headline) > 0:
            #print("Original:",predictions_part)
            # Keyword matches take precedence over the model's predictions
            predictions_part = [pred if head == "" else head for pred,head in zip(predictions_part,from_headline)]
            #print("New:", predictions_part)
            #print("\n")
        length_part = sum([len(a) for a in all_d])
        ratios = []
        # j instead of i, so that the outer loop index is not shadowed
        for j,d in enumerate(all_d):
            # Drop embedded JavaScript snippets
            if "var tag = document.createElement(\'script\')" in d:
                all_d[j] = ""
                d = ""
            # Recomputed on every pass, so removed texts no longer count.
            # (The original subtracted the removed length after the division,
            # which produced a negative ratio.)
            length_document = sum([len(a) for jd in documents for a in jd])
            ratio_document = len(d)/length_document if length_document else 0
            ratio_part = len(d)/length_part
            ratio_part_normalized = len(d)/length_part*len(all_d)
            ratios.append([ratio_document,ratio_part,ratio_part_normalized])
        if len(all_d) > 1:
            for l,r in makeOne(all_d,predictions_part,ratios).items():
                result[l].extend(r)
        else:
            result[predictions_part[0]].extend(all_d)
    return result

Keyword detection function¶

This function matches certain keywords against the headlines of the job offer. We treat a keyword match as a certain prediction, so we cross-check it (if there is one) against our model's prediction. Because we trust the keyword match more, it replaces the model's prediction.

def getPartFromHeadline(headlines):
    headlines = [h.lower() for h in headlines]
    matched = [""]*len(headlines)
    matcher = OrderedDict({
        "tasks" : ["aufgabe","tasks","tätigkeitsbeschreibung","ihre herausforderungen","was sie bei uns bewirken","sie werden","wofür wir sie suchen","stellenprofil","stellenkurzprofil","tätigkeitsprofil","jobprofil","das zeichnet sie aus"],
        "offer" : ["wir bieten","benefits","vorteile","unser angebot","was wir ihnen bieten","das bieten wir ihnen","das bieten wir dir","das erwartet sie bei uns","warum sie bei uns genau richtig sind","we offer","was sie erwartet","arbeiten bei","werden sie teil","das erwartet sie","was wir dir bieten","mehrwert","perspektive","ihre chance","was erwartet sie","warum sie zu uns kommen sollten","das bieten wir dir","this location offers","bei uns erwartet","dich erwartet","bieten wir"],
        "contact" : ["kontakt","contact","interessiert","ansprechpartner","interesse","bewerbung","zusätzliche informationen"],
        "company" : ["firmenprofil","über uns","about","wer wir sind","introduction","einleitung","willkommen","welcome","sind wir","unternehmen","wir sind"],
        "profile" : ["qualifikation","das bringst du mit","anforderungen","das bringen sie mit","sie haben","mitbringen","profil","sie bringen mit","voraussetzungen","unsere erwartungen","wir erwarten"]
    })
    # Labels are checked in the order above and later matches overwrite
    # earlier ones, so e.g. "stellenprofil" ends up as "profile" because
    # it also contains the keyword "profil".
    for h,ms in matcher.items():
        for i in range(0,len(headlines)):
            for m in ms:
                if m in headlines[i]:
                    matched[i] = h
    return matched
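A reduced, self-contained sketch of the same substring matching shows how keyword matches and model predictions are combined. The keyword table is shortened, the function name `match_headlines` is hypothetical, and the model output is made up for illustration.

```python
# Reduced, hypothetical keyword table; the real one is much longer
KEYWORDS = {
    "tasks": ["aufgabe", "stellenprofil"],
    "offer": ["wir bieten", "vorteile"],
    "profile": ["profil", "anforderungen"],
}


def match_headlines(headlines):
    """Return one label per headline, or "" if no keyword matches."""
    matched = [""] * len(headlines)
    for label, keywords in KEYWORDS.items():
        for i, headline in enumerate(headlines):
            if any(k in headline.lower() for k in keywords):
                matched[i] = label  # later labels overwrite earlier ones
    return matched


headlines = ["Ihre Aufgaben", "Ihr Profil", "Ihre Vorteile", "Stellenprofil", "Standort"]
labels = match_headlines(headlines)

# Made-up model output for the same five texts
model_predictions = ["tasks", "tasks", "offer", "tasks", "company"]

# A keyword match wins; without a match the model's prediction is kept
final = [pred if head == "" else head for pred, head in zip(model_predictions, labels)]
```

Note that "Stellenprofil" is labelled "profile" even though it is listed under "tasks", because the "profile" keywords are checked last and "profil" is a substring of it. Reordering the table or matching longer keywords first would be one way to change that.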

Transform function¶

The task of this function is simple. We take the predictions for each sentence or list item and merge them into one ordered object.

So what are we going to do?

For each document we calculate a score per label from two shares: the label's share of the characters and its share of the predictions, with the latter weighted twice. There are two possible outcomes:

- If one label reaches a score of 70% or more, we return the whole document under that one label
- Otherwise, we split the document by predicted label and return each text under its own label
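As a worked example of the score, assume a document with four snippets, three of them predicted as "tasks". The helper `label_scores` and all numbers are hypothetical; the weighting follows the expression used in the function below.

```python
LABELS = ["company", "tasks", "profile", "offer", "contact"]


def label_scores(preds, char_shares):
    """Score per label: (character share + 2 * occurrence share) / 3."""
    char_share = dict.fromkeys(LABELS, 0.0)
    occurrence_share = dict.fromkeys(LABELS, 0.0)
    for pred, share in zip(preds, char_shares):
        char_share[pred] += share
        occurrence_share[pred] += 1 / len(preds)
    return {l: (char_share[l] + 2 * occurrence_share[l]) / 3 for l in LABELS}


# Made-up predictions for the four snippets of one document
preds = ["tasks", "tasks", "tasks", "profile"]
# Each snippet's share of the document's characters
char_shares = [0.3, 0.3, 0.3, 0.1]

scores = label_scores(preds, char_shares)
dominant = [l for l, s in scores.items() if s >= 0.7]
```

For "tasks" the score is (0.9 + 2 * 0.75) / 3 = 0.8, which passes the 0.7 threshold, so the whole document would be returned under "tasks". For "profile" it is (0.1 + 2 * 0.25) / 3 = 0.2.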

def makeOne(rows, preds, percentages, min_percent=0.7):
    # Saving the shares for each label
    each_label = {"company":0,"tasks":0,"profile":0,"offer":0,"contact":0}            # share of characters
    each_label_occurence = {"company":0,"tasks":0,"profile":0,"offer":0,"contact":0}  # share of predictions
    result = {"company":[],"tasks":[],"profile":[],"offer":[],"contact":[]}
    for i,pred in enumerate(preds):
        each_label[pred] += percentages[i][1]
        each_label_occurence[pred] += 1/len(preds)
    # One dominant label: return the whole document under it
    for l,p in each_label.items():
        if (p + each_label_occurence[l]*2)/3 >= min_percent:
            return {l:rows}
    # No dominant label: file each text under its predicted label
    for r,pred in zip(rows,preds):
        if r not in result[pred]:
            result[pred].append(r)
    return result

2. Get the transformation started¶

We have now defined the functions needed to transform our data. The outcome is, for each job offer, a list of texts per label. To compare the model's predictions with the 'hard' keyword predictions, the commented-out print statements in getParts() can be enabled.

For this run, I limit the dataset to the first 100 job offers.

## Start
all_job_descriptions, all_headlines = [], []
for i, jo in job_offers[:100].iterrows():
    texts, heads = parseHTML(jo)
    all_job_descriptions.append(texts)
    all_headlines.append(heads)

# Collect one dict per job offer and build the DataFrame in one go
# (DataFrame.append was removed in pandas 2.0)
rows = []
for documents, headlines in zip(all_job_descriptions, all_headlines):
    rows.append(getParts(documents, headlines))
results = pd.DataFrame(rows)

results.head(20)

Result¶

As you can see, there are still some job offers with empty labels. If you look closer, you'll see that most of them are written in English. This of course makes it harder for our prediction model to identify the parts correctly. When we go further into the details of each part, we can simply filter out English texts by using part-of-speech tagging (they will produce errors).
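To quantify how many job offers are affected, the empty labels can be counted. A minimal sketch on plain dictionaries in the shape returned by getParts(); the two sample rows are made up for illustration.

```python
LABELS = ["company", "tasks", "profile", "offer", "contact"]

# Made-up results, one dict per job offer
results_sample = [
    {"company": ["Wir sind ..."], "tasks": ["Entwickeln ..."],
     "profile": ["Studium ..."], "offer": [], "contact": ["Kontakt ..."]},
    {"company": [], "tasks": ["Develop ..."],
     "profile": [], "offer": [], "contact": []},
]

# Labels without any text, per job offer
empty_per_offer = [[l for l in LABELS if not row[l]] for row in results_sample]

# Positions of job offers that miss at least one label
incomplete = [i for i, empty in enumerate(empty_per_offer) if empty]
```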

Further analysis¶

For further analysis of the job offers, we merge our transformed dataset with the original one and save the result. I also save individual parts as text files for manual labelling. This will help to classify the data for more insights.

# The original "company" column would clash with the new "company" label
job_offers = job_offers.rename(columns = {"company": "company_name"})
job_offers = job_offers.merge(results, left_index = True, right_index = True)
job_offers.info()

# Write all texts of one label into a single text file for manual labelling
with open('../Task_Classification/tasks.txt', 'w', encoding="UTF8") as outfile:
    for _, jo in job_offers.iterrows():
        outfile.write("".join(jo["tasks"]))

with open('../Task_Classification/profile.txt', 'w', encoding="UTF8") as outfile:
    for _, jo in job_offers.iterrows():
        outfile.write("".join(jo["profile"]))

# Save the merged dataset; to_json() returns a string,
# which json.dump() stores as one JSON string
jo_json = job_offers.to_json()
with open('../Task_Classification/job_offers.json', 'w') as outfile:
    json.dump(jo_json, outfile)